Hello!

For about five years, this newsletter was called Death Letter Reds. I used it to post school essays I’d enjoyed writing, the occasional piece of art/music analysis and a couple of screeds against the Liberal Party. It has (mostly) been archived as a previous chapter in my life, and that version of my writing persona will be semi-dearly missed.

But life moves on, and my writing style has become more analytical and less sweary. In the past year, I’ve…

Had two papers on EU-Southeast Asian trade, an op-ed and a policy brief, published during a three-month stint at the European Institute of Asian Studies.

Co-written a peer-reviewed article on Thai fishery legislation for the East Asia Forum.

Interviewed the fantastic Shiloh Babbington, the New Zealand co-lead for the Indigenous Research Network, on behalf of the APEC Secretariat.

Whilst I’m very proud of this work and thank every editor and co-contributor who has taken a chance on me, the opportunity to write about the area I’ve felt most compelled to address over the past two years has, so far, eluded me professionally: AI governance, particularly concerning the Indo-Pacific.

You might, understandably, roll your eyes at yet another Substack entering the fray on this topic. So allow me to state my case quickly.

Recently, I completed a week-long course on the Transformative Impact of AI from BlueDot Impact, an AI safety non-profit. While I enjoyed my time and would strongly recommend it, some of the views within the AI safety sphere about the existential/societal risk of AI have become codified far too quickly. These include:

The assumption that an “Intelligence Explosion,” where machines become unfathomably quick at self-improvement, is very likely.

The quasi-mythical assumptions about the eventual capabilities of ML systems.

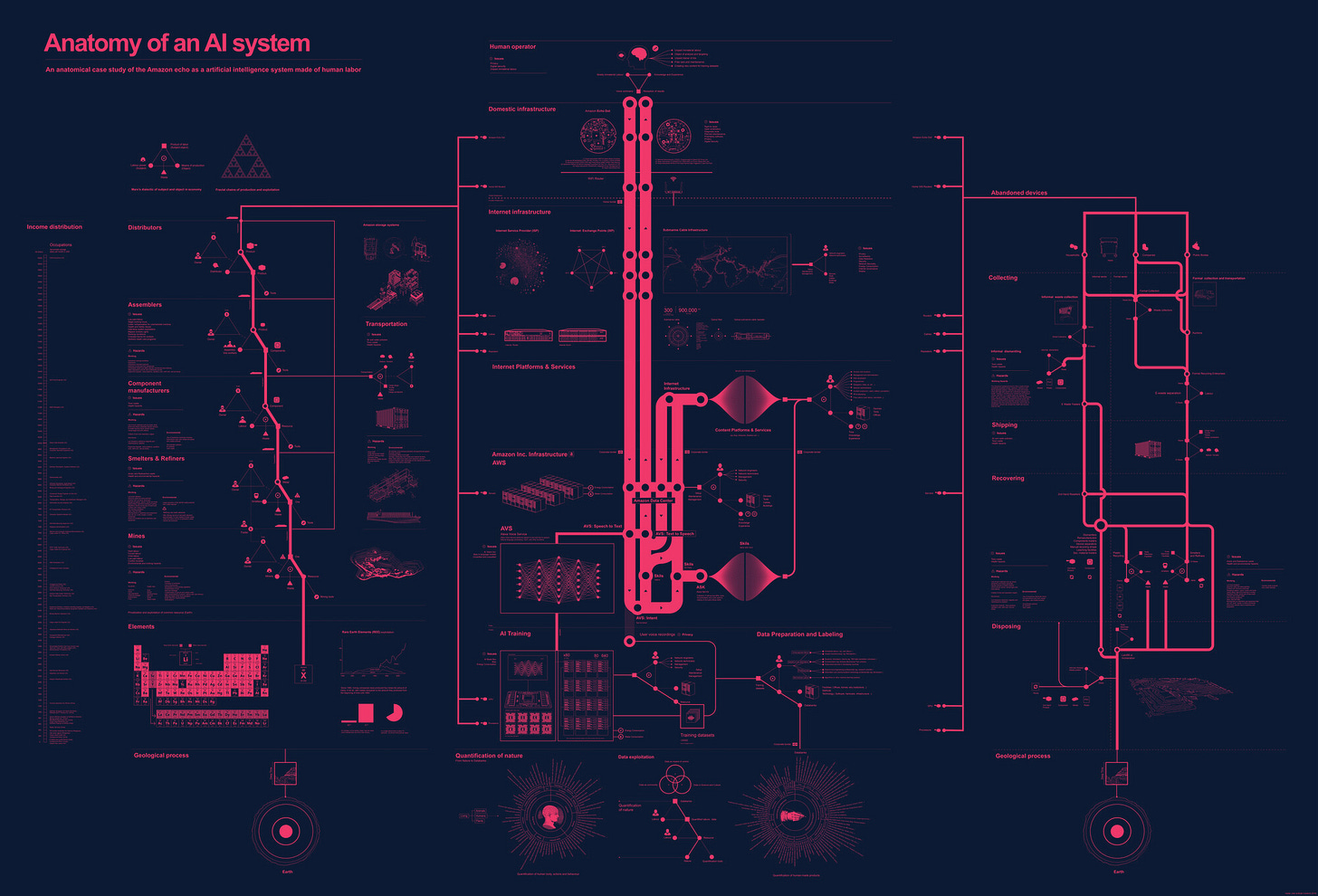

Ignorance of the supply chain complexity for constructing and maintaining hyperscale data centres.

A disregard for the complexity and fragility of the dependencies upholding modern digital infrastructure.

Justifying these points will be the overarching goal of Polyphase South, so bear with my lack of elaboration for now. My broader point is that, taken together, these views produce an AI safety landscape that concentrates all the influence among the hundreds or thousands of engineers developing these technologies, rather than the millions of people, from rare earth mineral miners to ICANN key holders, who can and should materially impact the speed, scale and deployment of this technology.

The current debate breeds powerlessness and nihilism among the many, who unjustly feel that they cannot contribute, and an optimism driven by the warped techno-optimist noblesse oblige of the few who control the purse strings. Any small contribution we can make to stretch the AI safety debate a little wider is worthwhile.

I’m looking forward to uploading some articles soon.